The New AI Stack: From Foundation Models to Startups Building on Top

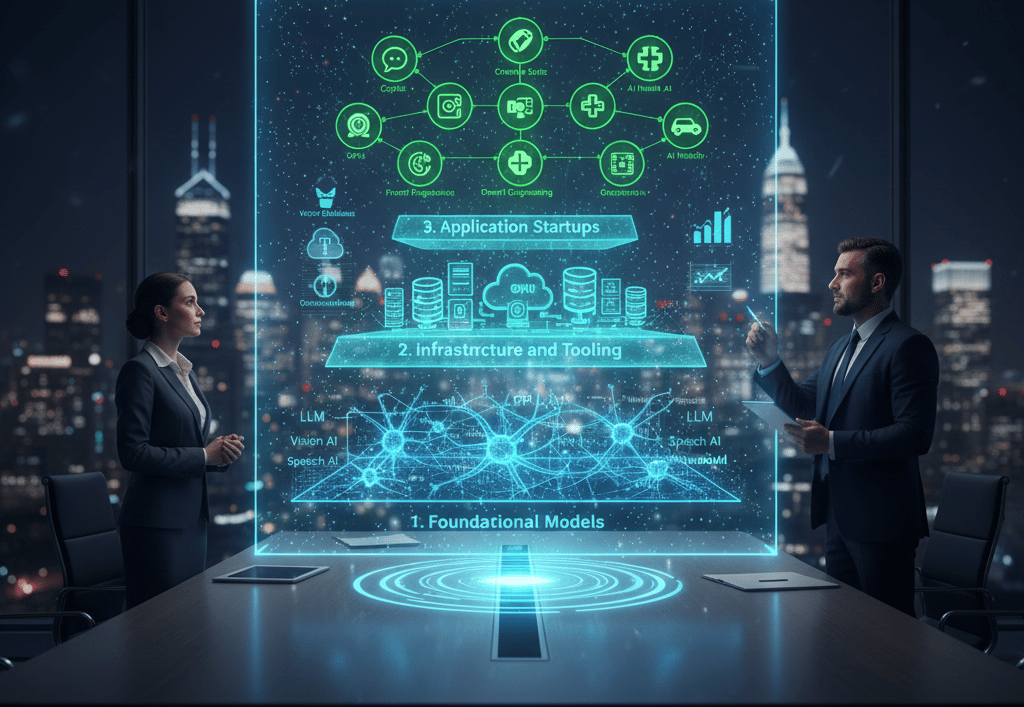

This article provides a comprehensive map of the AI technology stack as it stands today, breaking down the ecosystem into three distinct layers. It examines the foundation model providers like OpenAI, Anthropic, and Google at the base; explores the critical infrastructure layer including vector databases, orchestration frameworks, and observability tools; and surveys the explosion of application startups building specialized AI products across enterprise software, customer service, productivity, and creative tools. The piece analyzes the competitive dynamics between layers, addresses the defensibility question facing application startups, and offers perspective on how this emerging stack compares to previous technology paradigm shifts.

7/3/2023 · 3 min read

The artificial intelligence landscape has evolved dramatically over the past year, crystallizing into a distinct technology stack that mirrors the infrastructure layers we've seen in previous computing paradigm shifts. As we reach the midpoint of 2023, a clear picture is emerging of how the AI ecosystem is organizing itself—from foundational models at the base to specialized applications at the top.

Layer 1: Foundation Model Providers

At the foundation of this new stack sit the companies training large language models and multimodal AI systems. OpenAI remains the dominant player with GPT-4, released in March 2023, which powers ChatGPT Plus and is available via API. Anthropic entered the arena strongly with Claude, emphasizing safety and longer context windows. Google has responded with PaLM 2 and Bard, while Meta released Llama 2 just last month as an openly available alternative.

These foundation models represent billions of dollars in compute investment and require massive datasets, which led many observers to expect the layer to become a natural monopoly. The landscape has turned out more diverse than predicted, with open models from Stability AI, Hugging Face, and others ensuring competition and accessibility.

Layer 2: Infrastructure and Tooling

Between foundation models and applications, a crucial infrastructure layer has emerged rapidly. Vector databases like Pinecone, Weaviate, and Chroma have become essential for building AI applications that need semantic search and long-term memory. These databases store embeddings—numerical representations of text—enabling efficient similarity search across millions of documents.
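To make the similarity-search idea concrete, here is a minimal sketch in plain Python. The three-dimensional vectors and document IDs are made up for illustration (real embeddings come from a model and typically have hundreds or thousands of dimensions), and the brute-force scan is only a stand-in for the approximate nearest-neighbor indexes that production vector databases generally rely on.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Return the k document IDs whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]

# Hypothetical hand-written "embeddings"; a real system would get these from a model.
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "api-rate-limits": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]  # pretend this is the embedding of a question about refunds

print(top_k(query, index, k=2))  # ['refund-policy', 'shipping-times']
```

The scan above touches every stored vector, which is fine for three documents but not for millions. That scaling gap is the problem dedicated vector databases exist to solve.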

Observability and monitoring tools are another critical category. LangSmith from LangChain, Weights & Biases, and Helicone provide developers with visibility into their AI application performance, token usage, and model behavior. As AI systems become more complex, understanding why they produce specific outputs becomes crucial for debugging and improvement.
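As a rough illustration of what this category of tooling records, the sketch below wraps a model call and logs the prompt, the output, latency, and an approximate token count. Everything here is hypothetical: `Tracer`, `CallRecord`, and `fake_model` are invented names rather than the API of any product mentioned above, and counting whitespace-separated words is only a crude proxy for real tokenization.

```python
import time
from dataclasses import dataclass, field

def rough_token_count(text):
    """Crude assumption: one token per whitespace-separated word."""
    return len(text.split())

@dataclass
class CallRecord:
    prompt: str
    output: str
    prompt_tokens: int
    output_tokens: int
    latency_s: float

@dataclass
class Tracer:
    records: list = field(default_factory=list)

    def traced(self, model_fn):
        """Wrap model_fn so every call is appended to self.records."""
        def wrapper(prompt):
            start = time.perf_counter()
            output = model_fn(prompt)
            elapsed = time.perf_counter() - start
            self.records.append(CallRecord(
                prompt, output,
                rough_token_count(prompt), rough_token_count(output),
                elapsed,
            ))
            return output
        return wrapper

    def total_tokens(self):
        return sum(r.prompt_tokens + r.output_tokens for r in self.records)

def fake_model(prompt):
    """Hypothetical stand-in for a hosted model call."""
    return "stub answer to: " + prompt

tracer = Tracer()
model = tracer.traced(fake_model)
model("What is a vector database?")
print(tracer.total_tokens())  # 13 (5 prompt words + 8 output words)
```

Hosted tools layer dashboards, search, and cost reporting on top of records like these, but the underlying idea is the same: capture every call so that unexpected outputs can be traced back to the exact prompt that produced them.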

Orchestration frameworks like LangChain and LlamaIndex have become indispensable for developers building complex AI workflows. These tools handle prompt management, chain multiple model calls together, integrate with vector databases, and manage context windows—solving common problems so developers don't have to reinvent the wheel.
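The core pattern these frameworks package up can be sketched in a few lines of plain Python. This is not LangChain or LlamaIndex code: the templates, `fake_llm`, and the word-based context budget are all assumptions made for illustration, and a real framework would count model tokens rather than words.

```python
SUMMARIZE_TEMPLATE = "Summarize the following notes:\n{context}"
ANSWER_TEMPLATE = "Using this summary:\n{summary}\nAnswer the question: {question}"

def fake_llm(prompt):
    """Hypothetical stand-in for a hosted model call."""
    return f"<reply to a {len(prompt.split())}-word prompt>"

def trim_to_budget(chunks, max_words):
    """Keep chunks in order until the next one would exceed the word budget."""
    kept, used = [], 0
    for chunk in chunks:
        n = len(chunk.split())
        if used + n > max_words:
            break
        kept.append(chunk)
        used += n
    return kept

def answer_with_context(question, chunks, llm=fake_llm, max_words=50):
    """Two chained calls: summarize the retrieved context, then answer from the summary."""
    context = "\n".join(trim_to_budget(chunks, max_words))
    summary = llm(SUMMARIZE_TEMPLATE.format(context=context))
    return llm(ANSWER_TEMPLATE.format(summary=summary, question=question))

chunks = ["Refunds are issued within 14 days.", "Shipping takes 3 to 5 business days."]
print(answer_with_context("How long do refunds take?", chunks))
```

Passing `llm` as a parameter keeps the chain independent of any particular provider, which is roughly the abstraction an orchestration framework offers on a larger scale.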

Evaluation and testing infrastructure is emerging as another essential component. Companies like Humanloop and Scale AI are building tools to test AI outputs systematically, comparing model performance and detecting regressions—critical capabilities as AI applications move into production.
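A regression check of this kind can be sketched as follows. The test cases, the two stub "model versions," and the function names are all hypothetical, and substring matching is a deliberately simple pass criterion. Real evaluation suites often rely on human review or model-graded scoring instead.

```python
def evaluate(model_fn, cases):
    """Run each (name, prompt, check) case and record whether the output passes."""
    results = {}
    for name, prompt, check in cases:
        results[name] = bool(check(model_fn(prompt)))
    return results

def pass_rate(results):
    return sum(results.values()) / len(results) if results else 0.0

def find_regressions(baseline, candidate):
    """Cases that passed on the baseline but fail on the candidate."""
    return sorted(
        name for name, passed in baseline.items()
        if passed and not candidate.get(name, False)
    )

CASES = [
    ("capital", "What is the capital of France?", lambda out: "Paris" in out),
    ("arithmetic", "What is 2 + 2?", lambda out: "4" in out),
]

def model_v1(prompt):
    """Stub for the currently deployed model."""
    answers = {"What is the capital of France?": "Paris", "What is 2 + 2?": "4"}
    return answers.get(prompt, "")

def model_v2(prompt):
    """Stub for a candidate model that has regressed on arithmetic."""
    answers = {"What is the capital of France?": "The capital is Paris.", "What is 2 + 2?": "5"}
    return answers.get(prompt, "")

baseline = evaluate(model_v1, CASES)
candidate = evaluate(model_v2, CASES)
print(pass_rate(baseline), pass_rate(candidate))  # 1.0 0.5
print(find_regressions(baseline, candidate))      # ['arithmetic']
```

Running a suite like this before every model or prompt change is what turns "the new version seems fine" into a measurable claim.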

Layer 3: Application Startups

The application layer is exploding with startups and products applying foundation models to specific use cases. In enterprise software, Jasper (marketing copy), GitHub Copilot (code completion), and Harvey (legal research) offer vertical-specific AI assistants that understand domain context and integrate with existing workflows.

Customer service is being transformed by products like Intercom's Fin and Ada, which use large language models such as GPT-4 to resolve support tickets. These applications combine foundation models with company-specific knowledge bases, so answers are grounded in the company's own documentation rather than in the model's general training data.

In productivity software, Notion AI and the recently announced Microsoft 365 Copilot are embedding AI directly into tools people use daily. These integrations feel less like "AI products" and more like natural extensions of existing software, suggesting where the market is heading.

Creative tools represent another major category. Runway and Synthesia (video generation) and ElevenLabs (voice synthesis and cloning) are pushing boundaries in content creation, while Midjourney remains one of the most widely used AI image generators despite being primarily Discord-based.

The Integration Question

A fascinating tension exists in this stack: will foundation model providers integrate vertically into applications, or will independent startups maintain defensible positions? OpenAI's recent plugins announcement and custom instructions suggest vertical integration, while Microsoft's aggressive embedding of AI across its product suite demonstrates how large platforms can leverage foundation models.

Application startups are building moats through proprietary data, workflow integration, domain expertise, and trust relationships with customers. However, the "thin wrapper" concern is real—any application that simply adds a prompt template on top of GPT-4 faces commoditization risk.

Looking Ahead

This stack is still crystallizing. We're likely to see consolidation at the infrastructure layer as clear winners emerge, while the application layer should continue fragmenting into increasingly specialized tools. The winners will be companies that create genuine value beyond model access—whether through unique data, superior user experience, deep domain knowledge, or network effects.

As we move through the second half of 2023, the AI stack is beginning to resemble mature technology ecosystems, with clear layers, specialized players, and emerging standards. For founders and investors, understanding where value accrues across these layers will be crucial for navigating this transformative wave.