Templates, Variables, and Dynamic Context

Learn how to build production-ready AI systems using programmatic prompting techniques. Master template design with variables, slot-filling patterns, dynamic context injection, and retrieval-augmented generation. Discover how treating prompts as code—with version control, type safety, and automated testing—creates maintainable, scalable AI applications.

4/15/2024 · 3 min read

If you're still copying and pasting prompts into a chat interface, you're missing the real power of AI integration. Professional AI applications don't use static prompts—they build them dynamically from code, injecting real-time data, user context, and retrieved information into carefully designed templates. This approach, called programmatic prompting, is how you transform AI from a playground experiment into a production-ready system component.

From Static Text to Dynamic Templates

Static prompts work fine for one-off tasks, but they collapse under real-world requirements. Imagine a customer support application that needs to reference user account details, order history, and current ticket context. You can't hardcode this information—it changes with every interaction.

Programmatic prompting treats your prompt as a template with variables that get filled at runtime. Instead of writing a complete prompt, you create a structure with placeholders:

```python
template = """You are a support agent for {company_name}.

Customer: {customer_name} (Account ID: {account_id})
Issue: {ticket_subject}
Previous context: {conversation_history}

Based on this customer's history: {order_summary}

Provide a helpful, personalized response addressing their concern.
"""
```

At runtime, your application populates these variables with actual data from your databases, APIs, and user sessions. Each customer gets a personalized prompt built specifically for their situation, but your core template remains clean and maintainable.
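A minimal sketch of that runtime step, assuming a shortened version of the support template and Python's built-in `str.format`. The `render` and `required_fields` helpers and all sample values are hypothetical, chosen only for illustration.

```python
from string import Formatter

# Shortened, illustrative version of a support template.
TEMPLATE = """You are a support agent for {company_name}.

Customer: {customer_name} (Account ID: {account_id})
Issue: {ticket_subject}

Provide a helpful, personalized response addressing their concern.
"""


def required_fields(template: str) -> set[str]:
    """Return the placeholder names that appear in the template."""
    return {name for _, name, _, _ in Formatter().parse(template) if name}


def render(template: str, **values: object) -> str:
    """Fill the template, failing loudly if any placeholder has no value."""
    missing = required_fields(template) - set(values)
    if missing:
        raise KeyError(f"missing template variables: {sorted(missing)}")
    return template.format(**values)


# Hypothetical data; a real application would load this from its own stores.
prompt = render(
    TEMPLATE,
    company_name="Acme Corp",
    customer_name="Dana Lee",
    account_id=48213,
    ticket_subject="Order arrived damaged",
)
print(prompt)
```

Checking for missing variables up front gives a clearer error than a bare `KeyError` from `str.format`, which names only the first missing key.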

Slot-Filling Patterns

Different parts of your prompt serve different purposes, and they change at different frequencies. Smart template design recognizes this through slot-filling patterns.

The system slot contains your core instructions and personality—this rarely changes. The context slot holds relevant background information specific to each request. The user slot contains the actual user query. The constraint slot might include time-sensitive rules or temporary policies.

Separating these concerns makes your prompts maintainable. When you need to update your AI's personality, you modify only the system slot. When regulations change, you update the constraint slot without touching other components. This modularity prevents the tangled mess that comes from monolithic prompt strings.
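One way to express these four slots in code is a small dataclass plus an assembly function. This is a sketch under assumptions: the `PromptSlots` and `assemble` names, the bracketed section labels, and the slot ordering are all illustrative choices, not a standard.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PromptSlots:
    system: str            # core instructions and personality; rarely changes
    context: str           # background specific to this request
    user: str              # the user's actual query
    constraints: str = ""  # time-sensitive rules or temporary policies


def assemble(slots: PromptSlots) -> str:
    """Join the non-empty slots under labelled headers, in a fixed order."""
    sections = [
        ("SYSTEM", slots.system),
        ("CONSTRAINTS", slots.constraints),
        ("CONTEXT", slots.context),
        ("USER", slots.user),
    ]
    return "\n\n".join(
        f"[{label}]\n{text.strip()}" for label, text in sections if text.strip()
    )


# Hypothetical values for illustration.
base = PromptSlots(
    system="You are a friendly support agent for Acme Corp.",
    context="The customer's last order was delivered three days ago.",
    user="Can I still return my order?",
)

# A policy change touches only the constraint slot; the other slots are reused as-is.
holiday = replace(base, constraints="Returns are accepted for 60 days during the holiday period.")

print(assemble(holiday))
```

Because each slot is a separate field, a change to one of them shows up as a one-line diff in review.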

Retrieving and Injecting Context

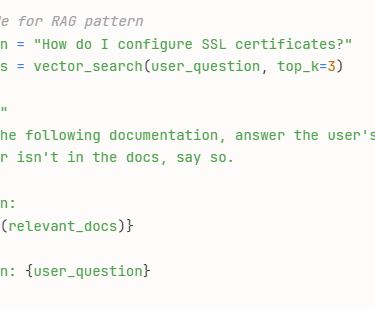

The most powerful programmatic prompts pull information from external sources right before building the final prompt. This pattern, often called Retrieval-Augmented Generation (RAG), grounds your AI in current, accurate information.

Consider a documentation assistant. When a user asks a question, your application:

1. Converts the question to a search query
2. Retrieves relevant documentation sections from your vector database
3. Injects those sections into a template
4. Sends the enriched prompt to the AI

This approach keeps your AI responses accurate and current without retraining models. Once updated documentation is re-indexed, responses reflect the new information.
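The retrieve-then-inject flow can be sketched without any external services. The example below is an assumption-heavy stand-in: it swaps the vector database for a simple word-overlap ranking over an in-memory dictionary, and the document contents, `retrieve`, and `build_rag_prompt` are hypothetical. The final step of sending the prompt to a model is left out.

```python
import re

# Hypothetical in-memory "documentation"; a real system would query a vector database.
DOCS = {
    "install": "Install the CLI with the package manager, then run the init command.",
    "auth": "Authenticate by creating an API key in the dashboard and exporting it.",
    "limits": "Rate limits apply per API key and reset every minute.",
}

RAG_TEMPLATE = """Answer the question using only the documentation below.
If the answer is not in the documentation, say so.

Documentation:
{sections}

Question: {question}
"""


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: dict[str, str], top_k: int = 2) -> list[str]:
    """Rank documents by word overlap with the question (stand-in for vector search)."""
    query = tokenize(question)
    scored = [(len(query & tokenize(body)), name) for name, body in docs.items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [docs[name] for score, name in scored[:top_k] if score > 0]


def build_rag_prompt(question: str, docs: dict[str, str]) -> str:
    """Inject the retrieved sections and the question into the template."""
    sections = retrieve(question, docs)
    joined = "\n".join(f"- {s}" for s in sections) or "(no relevant sections found)"
    return RAG_TEMPLATE.format(sections=joined, question=question)


prompt = build_rag_prompt("How do I create an API key?", DOCS)
print(prompt)
```

Only `retrieve` would change when moving to a real vector store; the template and the injection step stay the same.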

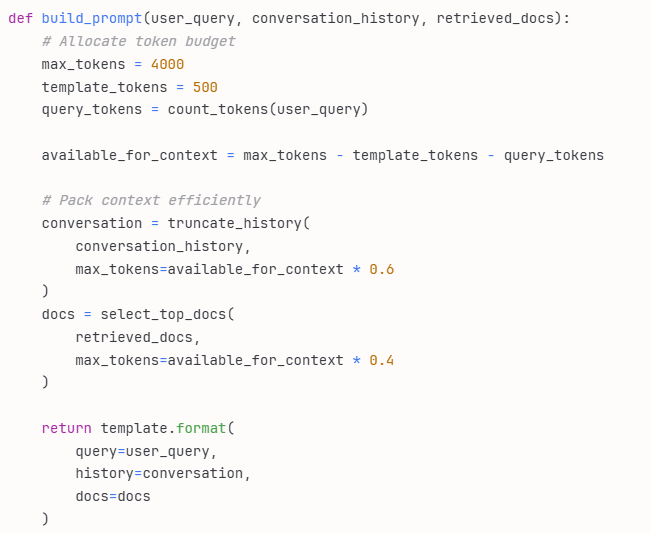

Managing Long Contexts

Real applications quickly encounter context length limits. User conversation histories grow long. Retrieved documents might be extensive. The assembled prompt can run to thousands of tokens, but the model's context window is finite.

Programmatic prompting gives you tools to manage this. Implement intelligent truncation: keep the most recent conversation turns and summarize older ones. Rank retrieved documents by relevance and include only the top results. Use token counting libraries to measure your prompt before sending it, trimming components as needed.
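A rough sketch of budget-based trimming follows. Two simplifications are assumptions made for brevity: `count_tokens` counts whitespace-separated words rather than using a real tokenizer for the target model, and older conversation turns are dropped rather than summarized. All function names are hypothetical.

```python
def count_tokens(text: str) -> int:
    """Rough stand-in: whitespace-separated words. Swap in the target model's tokenizer."""
    return len(text.split())


def fit_history(turns: list[str], budget: int) -> list[str]:
    """Keep the most recent turns that fit within the budget, preserving order."""
    kept: list[str] = []
    used = 0
    for turn in reversed(turns):  # walk from newest to oldest
        cost = count_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


def fit_documents(ranked_docs: list[str], budget: int) -> list[str]:
    """Take documents in relevance order until the budget is exhausted."""
    kept: list[str] = []
    used = 0
    for doc in ranked_docs:
        cost = count_tokens(doc)
        if used + cost > budget:
            break
        kept.append(doc)
        used += cost
    return kept


# Hypothetical conversation for illustration.
turns = [
    "user: hi there",
    "agent: hello how can I help",
    "user: my order is late",
    "agent: let me check that",
]
recent = fit_history(turns, budget=10)
print(recent)
```

Giving each component its own budget (history, documents, instructions) keeps one oversized input from crowding out the others.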

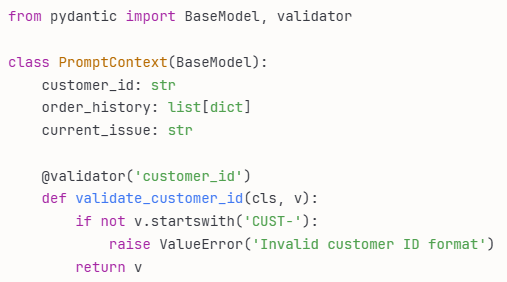

Type Safety and Validation

When prompts are code, they benefit from software engineering best practices. Use type systems to ensure variables are correctly formatted. Validate inputs before injection to catch malformed prompts early and reduce exposure to prompt injection attacks.

Python's type hints and Pydantic models work well for this. They catch errors before they reach your AI, preventing cryptic failures and improving reliability.
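A minimal sketch of validating inputs before they reach a template, written with a standard-library dataclass so it runs without extra dependencies; a Pydantic model would declare the same fields and constraints declaratively. The `TicketContext` class, its fields, and the 200-character limit are assumptions for illustration.

```python
from dataclasses import dataclass

MAX_FIELD_LENGTH = 200  # illustrative limit, not a recommendation


@dataclass(frozen=True)
class TicketContext:
    customer_name: str
    account_id: int
    ticket_subject: str

    def __post_init__(self) -> None:
        # Dataclasses do not enforce annotations at runtime, so check explicitly.
        is_int = isinstance(self.account_id, int) and not isinstance(self.account_id, bool)
        if not is_int or self.account_id <= 0:
            raise ValueError("account_id must be a positive integer")
        for field_name in ("customer_name", "ticket_subject"):
            value = getattr(self, field_name)
            if not isinstance(value, str) or not value.strip():
                raise ValueError(f"{field_name} must be a non-empty string")
            if len(value) > MAX_FIELD_LENGTH:
                raise ValueError(f"{field_name} exceeds {MAX_FIELD_LENGTH} characters")
            # Single-line fields should not carry line breaks into the prompt layout.
            if "\n" in value or "\r" in value:
                raise ValueError(f"{field_name} must be a single line")


def render_ticket(ctx: TicketContext) -> str:
    """Build the prompt fragment from an already-validated context object."""
    return (
        f"Customer: {ctx.customer_name} (Account ID: {ctx.account_id})\n"
        f"Issue: {ctx.ticket_subject}\n"
    )


# Hypothetical values for illustration.
ticket = TicketContext(customer_name="Dana Lee", account_id=48213, ticket_subject="Order arrived damaged")
print(render_ticket(ticket))
```

Checks like these limit malformed input; they do not by themselves rule out prompt injection through otherwise valid text.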

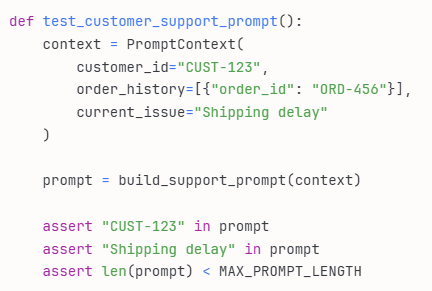

Version Control and Testing

The greatest advantage of programmatic prompting is treating prompts like any other code. Store templates in version control. Write unit tests that verify template rendering with various inputs. Create test fixtures with known-good examples. Run regression tests to ensure prompt changes don't break existing functionality.
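As a sketch of what such tests might look like, the example below uses Python's built-in `unittest` against a small illustrative template. The template text, the `render` helper, and the fixture string are hypothetical; a real project would import its own templates and keep fixtures in files under version control.

```python
import unittest

# Illustrative template; in practice this would be imported from the application.
TEMPLATE = "You are a support agent for {company_name}.\nIssue: {ticket_subject}\n"

# Known-good rendering, kept as a regression fixture.
EXPECTED = "You are a support agent for Acme Corp.\nIssue: Late delivery\n"


def render(template: str, **values: str) -> str:
    """Fill the template; str.format raises KeyError for a missing variable."""
    return template.format(**values)


class RenderTemplateTests(unittest.TestCase):
    def test_fills_all_placeholders(self):
        result = render(TEMPLATE, company_name="Acme Corp", ticket_subject="Late delivery")
        self.assertNotIn("{", result)
        self.assertNotIn("}", result)

    def test_missing_variable_raises(self):
        with self.assertRaises(KeyError):
            render(TEMPLATE, company_name="Acme Corp")

    def test_matches_known_good_fixture(self):
        result = render(TEMPLATE, company_name="Acme Corp", ticket_subject="Late delivery")
        self.assertEqual(result, EXPECTED)
```

Saved in a test module, these run with `python -m unittest`. Tests like these verify rendering only; checking the quality of model output requires a separate evaluation step.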

Building for Scale

Programmatic prompting isn't just about technical elegance—it's about building AI systems that scale. When prompts are code, they're maintainable, testable, and collaborative. Multiple developers can work on different template components. Changes are reviewable through pull requests. Bugs are reproducible and fixable.

The chat interface is where AI experimentation begins, but programmatic prompting is where production AI systems are built. Stop pasting, start coding, and unlock the full potential of AI in your applications.