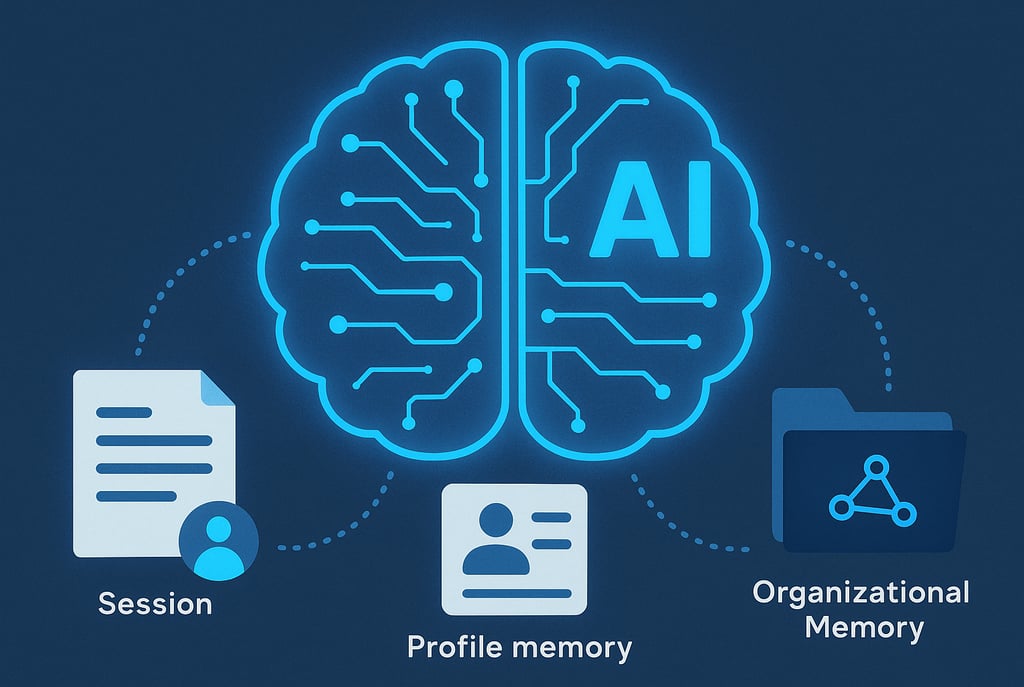

Session, Profile, and Organizational Memory

AI products rely on memory to be useful. This article breaks down the three essential layers—Session, Profile, and Organizational Memory—and the specific governance challenges each introduces. Learn how to architect transparent long-term memory systems with built-in privacy controls, retention policies, and auditability to build powerful, compliant AI products in 2025.

1/27/2025 · 3 min read

The true intelligence of an AI product is not defined by its core Large Language Model (LLM), but by its memory architecture. A stateless LLM is like a brilliant expert with amnesia; it gives a perfect answer to the immediate question but forgets everything you said a moment ago. To transition from a novelty chatbot to a transformative tool, AI products must incorporate layers of memory that allow them to recall, adapt, and learn over time.

This layered memory approach is essential for coherence, personalization, and enterprise utility. However, the act of remembering user and company data introduces significant risks concerning privacy, compliance, and governance. Architects must design these memory layers with meticulous attention to boundaries and auditability.

We can break down effective AI memory into three crucial layers: Session, Profile, and Organizational.

1. Session Memory: Coherence and Context

Session Memory is the AI’s short-term working memory. It maintains context within a single, continuous interaction, such as a chat thread or a single task flow.

What it is: The conversation history, including user prompts and model responses, passed back into the context window for subsequent turns. It enables coherent dialogue and multi-step reasoning. For very long conversations, this memory is often summarized or condensed to fit within the LLM's context window—a technique known as a Summary Buffer.

Where it lives: Typically stored in transient application state, a Redis cache, or a short-lived database record.

The Governance Trap: Even short-term memory can capture sensitive data or intent, and it quietly becomes long-term memory if cached or stored records are never expired.

Design Principle: Implement a Time-to-Live (TTL). Session memory must have a deterministic expiration date (e.g., a few hours, or immediately upon session close). For applications handling highly sensitive data, offer an ephemeral or "Memory-Free" mode where no session history is stored or persisted at all.
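A minimal sketch of the TTL principle, assuming an in-memory store rather than a real cache. The names `SessionStore`, `ttl_seconds`, and `ephemeral` are hypothetical and chosen for illustration. The deadline is fixed at the first write, so expiry is deterministic rather than sliding. In Redis, key expiry (the `EXPIRE` command) would play the same role.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SessionStore:
    """Illustrative in-memory session memory with a fixed time-to-live."""
    ttl_seconds: float
    ephemeral: bool = False                       # "Memory-Free" mode: store nothing
    clock: Callable[[], float] = time.monotonic   # injectable so expiry can be tested
    _sessions: dict = field(default_factory=dict)  # session_id -> (expires_at, turns)

    def append(self, session_id: str, role: str, text: str) -> None:
        if self.ephemeral:
            return  # nothing is ever persisted in ephemeral mode
        now = self.clock()
        expires_at, turns = self._sessions.get(
            session_id, (now + self.ttl_seconds, [])
        )
        if expires_at <= now:
            # The old history has expired: start over with a fresh deadline.
            expires_at, turns = now + self.ttl_seconds, []
        turns.append((role, text))
        self._sessions[session_id] = (expires_at, turns)

    def history(self, session_id: str) -> list:
        entry = self._sessions.get(session_id)
        if entry is None:
            return []
        expires_at, turns = entry
        if expires_at <= self.clock():
            del self._sessions[session_id]  # purge on read once the deadline passes
            return []
        return list(turns)

    def close(self, session_id: str) -> None:
        # Immediate deletion when the session ends.
        self._sessions.pop(session_id, None)
```

Purging on read keeps the sketch short. A production store would also need a background sweep, or a backend with native expiry, so that sessions nobody reads again are still deleted.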

2. Profile Memory: Personalization and Adaptability

Profile Memory (or User-Specific Memory) is the persistent, long-term store of facts, preferences, and key behaviors associated with an individual user or entity.

What it is: Data that enables personalization. Examples include: a user's role (e.g., "Director of Marketing"), stated preferences (e.g., "always use a formal tone," "prefers Italian cuisine"), saved entities (e.g., project names, frequent contacts), and summaries of past successful interactions (Episodic Memory).

Where it lives: Stored in a durable database, often a Vector Database (for semantic retrieval of facts) or a standard key-value store (for structured preferences).

The Governance Trap: This layer is a data privacy minefield because it explicitly links behavioral data to an identity, creating a rich behavioral dataset that can be used for profiling or training.

Design Principle: Transparency and Control. Users must have complete visibility and direct control over their Profile Memory. This means providing an easily accessible "Memory Dashboard" where users can view, edit, or selectively delete what the AI remembers about them. Implement purpose limitation gates—ensure memory collected for Task A cannot be automatically used to inform an action for Task B without explicit permission.
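One way to picture a purpose limitation gate, as a sketch under stated assumptions: each remembered item carries the set of purposes the user has consented to, and recall filters on that set. `ProfileMemory`, `MemoryItem`, and the purpose strings are hypothetical names, not part of any particular product or library.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryItem:
    key: str
    value: str
    purposes: set  # purposes the user has consented to for this item


@dataclass
class ProfileMemory:
    """Illustrative per-user memory with purpose gates and user controls."""
    items: dict = field(default_factory=dict)  # key -> MemoryItem

    def remember(self, key: str, value: str, purpose: str) -> None:
        # An item starts out usable only for the purpose it was collected for.
        self.items[key] = MemoryItem(key, value, {purpose})

    def grant(self, key: str, purpose: str) -> None:
        # Explicit permission from the user to reuse an item for another purpose.
        self.items[key].purposes.add(purpose)

    def recall(self, purpose: str) -> dict:
        # The gate: only items consented for this purpose are returned.
        return {k: i.value for k, i in self.items.items() if purpose in i.purposes}

    def view(self) -> dict:
        # What a "Memory Dashboard" could show: every item and its allowed uses.
        return {k: (i.value, sorted(i.purposes)) for k, i in self.items.items()}

    def forget(self, key: str) -> None:
        # Selective deletion requested by the user.
        self.items.pop(key, None)
```

For example, a preference stored with `remember("cuisine", "Italian", "restaurant_booking")` is returned by `recall("restaurant_booking")` but not by `recall("travel_planning")` until the user calls `grant("cuisine", "travel_planning")`.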

3. Organizational Memory: Factual Grounding and Corporate Knowledge

Organizational Memory is the shared, persistent knowledge base that grounds the AI's responses in company facts, policies, and procedures.

What it is: The corporate knowledge base, most often implemented via Retrieval-Augmented Generation (RAG). It includes internal documents, compliance manuals, HR policies, technical specifications, and historical data, which the AI retrieves and uses as context.

Where it lives: Highly centralized data systems, typically Vector Databases for retrieval alongside the source documents in secure file storage.

The Governance Trap: The risk here is not just privacy, but data security and consistency. Retrieval failures can lead to hallucinations (inaccurate facts), and uncontrolled data access can lead to data leakage across user groups.

Design Principle: Policy-Bound Memory and Granular Access. Every document (memory item) must have embedded metadata tags defining its sensitivity (PII, Confidential, Public) and its allowed audience/role. The retrieval system must strictly enforce access controls before injecting the context into the LLM. Furthermore, ensure all memory operations (retrieval and use) are logged and auditable to maintain a clear chain of accountability and compliance.
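The enforcement step can be sketched as a filter that sits between retrieval and prompt assembly. This is an illustration under assumptions: `Document`, `build_context`, the role names, and the audit record fields are all hypothetical, and the candidates are taken to be whatever the retriever returned.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    sensitivity: str          # e.g. "Public", "Confidential", "PII"
    allowed_roles: frozenset  # roles permitted to read this document


def build_context(candidates, user_roles, audit_log):
    """Filter retrieved documents by role, log the decision, return the context."""
    roles = set(user_roles)
    # A document is allowed only if the caller holds at least one permitted role.
    allowed = [d for d in candidates if d.allowed_roles & roles]
    withheld = [d for d in candidates if not (d.allowed_roles & roles)]
    # Record what was injected and what was withheld, so the answer can be audited.
    audit_log.append({
        "roles": sorted(roles),
        "injected": [d.doc_id for d in allowed],
        "withheld": [d.doc_id for d in withheld],
    })
    # Only authorized text ever reaches the prompt.
    return "\n\n".join(d.text for d in allowed)
```

Filtering after retrieval keeps the sketch simple. Where the vector store supports metadata filters, applying the same role check inside the query is usually preferable, because unauthorized documents then never leave the store.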

The Governance-First Blueprint for Memory

Integrating these memory layers moves an AI product from a novelty to an indispensable tool. But this cannot happen without a Governance-First mindset:

Default to Forgetting: Memory should have a default, short retention limit unless explicitly extended by a clear business need and user consent. Unbounded memory is a liability.

Segregate and Secure: Use distinct databases or memory namespaces for each layer (Session, Profile, Org) and apply different encryption, access controls, and retention policies to each.

Audit Everything: Implement memory observability—a mechanism to audit what was recalled, when, and why for any given answer, ensuring full traceability back to the source data and its access rules.
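The first two principles can be expressed as a small policy table, sketched here under assumptions. The namespaces and retention periods are placeholders invented for illustration, not recommendations, and `LayerPolicy` and `expires_at` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class LayerPolicy:
    namespace: str                # separate namespace (or database) per layer
    default_retention: timedelta  # how long items live unless extended


# Placeholder values: real limits depend on the product and its legal context.
POLICIES = {
    "session": LayerPolicy("mem:session", timedelta(hours=4)),
    "profile": LayerPolicy("mem:profile", timedelta(days=90)),
    "org": LayerPolicy("mem:org", timedelta(days=365)),
}


def expires_at(
    layer: str,
    created: datetime,
    extension: Optional[timedelta] = None,
    business_need: Optional[str] = None,
    user_consent: bool = False,
) -> datetime:
    """Return the deletion deadline for an item in the given layer."""
    policy = POLICIES[layer]
    deadline = created + policy.default_retention
    if extension is None:
        return deadline  # default to forgetting
    # Extending retention requires both a stated business need and user consent.
    if not (business_need and user_consent):
        raise PermissionError("extension requires a business need and user consent")
    return deadline + extension
```

Keeping the policy in one table makes the retention rules reviewable in a single place, and the same table can drive encryption and access settings per namespace.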

By architecting these layers intentionally, teams can deliver the personalized, coherent AI experiences users expect, without inadvertently creating a massive, ungovernable data hoard.