Multi-Model Orchestration, Using the Right Model for the Right Job

The future of AI is multi-model. Learn how to design an orchestration layer that intelligently routes user requests to the fast/cheap, reasoning-heavy, or domain-specialized models. This analysis breaks down routing strategies, the critical cost/latency trade-offs, and essential fallback mechanisms for building high-performing, cost-efficient, and reliable AI applications in 2025.

2/3/20253 min read

The idea that one Large Language Model (LLM) can be the perfect engine for every task in an enterprise is officially obsolete. The evolution of AI has led to a highly fragmented, yet specialized, landscape: we now have ultra-fast, cheap models, powerful but slow reasoning models, and highly specialized, domain-specific models.

The challenge of 2025 is not finding the best LLM; it’s building the Multi-Model Orchestration Layer that intelligently routes each incoming request to the most appropriate model. This orchestration layer acts as the "traffic cop" or "planner agent" for your AI applications, enabling you to optimize simultaneously for cost, latency, and quality.

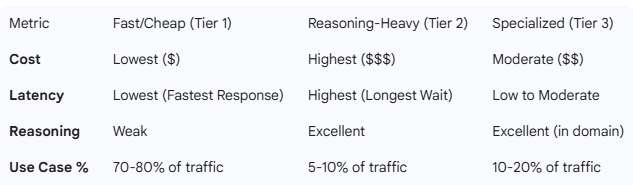

The Three-Tier Model Portfolio

An effective multi-model strategy starts with defining your model portfolio across three core tiers, each assigned a specific role in the system:

1. Fast, Cheap Models (The Workhorses)

These are often smaller, distilled models or highly optimized versions of larger ones (e.g., GPT-3.5 variants, Mistral, or smaller open-source models).

Role: Handle high-volume, low-complexity tasks where speed is paramount.

Use Cases: Simple summarization, sentiment analysis, greetings/chit-chat, content rephrasing, and classifying the intent of an incoming request (the routing task itself).

Trade-Off: Lowest cost, highest speed, but weaker reasoning and higher propensity for factual errors on complex queries.

2. Reasoning-Heavy Models (The Experts)

These are the frontier, large-context models (e.g., GPT-4 class, Claude Opus, Gemini Ultra). They offer superior logic, complex instruction following, and higher factual accuracy.

Role: Reserved for high-value, complex, or critical tasks that require deep reasoning or multi-step planning.

Use Cases: Complex code generation, legal analysis/comparison, market research synthesis, multi-step agentic planning, and acting as a final validator for outputs from Tier 1 models.

Trade-Off: Highest cost, highest latency, but the highest quality and reliability.

3. Domain-Specialized Models (The Specialists)

These are models (often Small Language Models, or SLMs) that have been fine-tuned on specific, proprietary datasets.

Role: Achieve expert-level accuracy and consistency in a narrow field.

Use Cases: Parsing highly structured data (e.g., invoice data extraction, specific legal clause identification), technical terminology translation, or executing tasks that rely on deep, internal knowledge.

Trade-Off: High accuracy within their domain, can often be cheaper than Tier 2, but fail completely when taken outside their scope.

Intelligent Routing Strategies

The core of multi-model orchestration is the Router LLM or a Classifier Model. This component intercepts every user request and decides its destination.

Intent Classification: A small, fast LLM (Tier 1) processes the initial user prompt and categorizes its intent (e.g., "Summarize," "Generate Code," "Check Policy"). The router then sends the request to the appropriate downstream model group.

Complexity Scoring: Requests can be scored based on length, number of internal questions, or required steps. Low-complexity scores go to the cheap model (Tier 1). High-complexity scores are routed to the powerful reasoning model (Tier 2).

Keyword/Metadata Matching: Queries containing proprietary keywords, technical jargon, or PII tags can be automatically routed to a Domain-Specialized Model (Tier 3) or a secure, on-premise endpoint to ensure data governance.

Confidence-Based Routing: A prompt can first be sent to a cheaper model (Tier 1). If the model's self-assessment of its answer's confidence is low, or if the output fails a simple check (e.g., malformed JSON), the request is automatically rerouted to the more powerful Tier 2 model for a second attempt.

Cost, Latency, and Quality Trade-Offs

The goal of routing is to find the sweet spot on the cost-performance curve for every request.

By routing 70-80% of the daily traffic to the inexpensive models, a system can maintain high performance and low operational costs, reserving the premium models only for the critical, high-value tasks that justify the expense. This strategic approach can result in 40-80% reduction in overall inference costs compared to running everything on a single, large model.

Building Reliability with Fallbacks

Robust orchestration requires fallback mechanisms to ensure service continuity.

Provider Outage Fallback: If the API for the primary Tier 2 model is down (e.g., rate limit exceeded or external outage), the router must automatically attempt a different, compatible Tier 2 model from a different vendor.

Model Failure Fallback: If any model returns a corrupted response (e.g., fails to produce valid JSON as requested, or times out), the request should be retried with the original model or immediately passed to a higher-tier model as a definitive corrective step.

Latency Guardrails: For real-time applications, a hard latency cap can be enforced. If the primary model doesn't respond within the specified Service Level Objective (SLO), the request is canceled and rerouted to a faster, though potentially less accurate, model.

Multi-model orchestration is the foundation of scalable, cost-effective, and reliable AI in production. It replaces the rigidity of a single model with the flexibility of a compound AI system, allowing teams to deliver high quality where it matters most, without incurring unnecessary expense for simple tasks.