Building on Top of LLM APIs

This post introduces developers to building on top of Large Language Model APIs, showing how to treat LLMs as a new backend capability for text understanding, generation, and transformation. It covers common product patterns (assistants, content tools, extraction, and RAG), key design principles for prompts, context, and validation, and a simple starter architecture for adding LLM-powered features to real-world applications.

3/6/2023 · 2 min read

At a high level, an LLM API is just a function:

Input: instructions + context (the prompt)

Output: structured or unstructured text (answer, code, JSON, summary, etc.)

You don’t train the model; you compose around it. Your job is to:

Tell it who it is (e.g., “You are a helpful support assistant for product X…”).

Tell it what to do (e.g., “Answer the user’s question using the documentation below…”).

Tell it how to respond (e.g., “Return JSON with fields: answer, confidence, suggested_links.”).

Once you see it this way, an LLM is just another microservice, one that happens to be very good at language.
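As a minimal sketch of the "who, what, how" idea, the three instructions can be assembled into one prompt string with plain Python. The function name `build_prompt`, the `###` section labels, and the sample documentation text are illustrative assumptions, not part of any particular provider's API.

```python
def build_prompt(role: str, task: str, response_format: str,
                 context: str, question: str) -> str:
    """Assemble who / what / how plus context into a single prompt string."""
    sections = [
        role,                                # who the model is
        task,                                # what it should do
        response_format,                     # how it should respond
        "### Documentation\n" + context,     # labeled context (label is an assumption)
        "### Question\n" + question,
    ]
    return "\n\n".join(sections)


prompt = build_prompt(
    role="You are a helpful support assistant for product X.",
    task="Answer the user's question using the documentation below.",
    response_format="Return JSON with fields: answer, confidence, suggested_links.",
    context="Password resets are available under Settings > Security.",
    question="How do I reset my password?",
)
print(prompt)
```

The resulting string is what you would send as the prompt; how it is split into system and user messages depends on the API you use.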

Common Product Patterns You Can Build

Here are some realistic patterns you can ship quickly:

Chat-style Assistants

Customer support bots grounded in your FAQ and docs

Internal assistants for HR/IT over company policies

Dev-doc bots that answer questions from your API reference

Content and Communication Helpers

Drafting emails, release notes, product descriptions

Summarizing tickets, calls, or long reports

Rewriting text for different tones or audiences

Extraction and Classification Pipelines

Turn messy text into structured JSON (entities, labels, categories)

Auto-tag incoming tickets, leads, or documents

Classify sentiment, urgency, or topic

RAG: Search + LLM

Search your own data (Elasticsearch, Postgres, vector DB)

Feed the most relevant chunks into the prompt

Let the LLM generate grounded answers using that context

Each of these is “just” normal app logic + an LLM call at the right moment.
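To make the RAG pattern concrete, here is a small sketch of "search, then feed the best chunks into the prompt". It assumes a toy keyword-overlap ranking in place of a real search backend (Elasticsearch, Postgres, or a vector DB), and the names `retrieve` and `build_rag_prompt` are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Rank chunks by shared words with the query (a stand-in for real search)."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(chunk)), chunk) for chunk in chunks]
    scored = [pair for pair in scored if pair[0] > 0]   # drop irrelevant chunks
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:top_k]]


def build_rag_prompt(query: str, chunks: list[str]) -> str:
    """Put only the most relevant chunks into the prompt."""
    context = "\n---\n".join(retrieve(query, chunks))
    return (
        "Answer using only the context below. "
        "If the context is not enough, say so.\n\n"
        f"### Context\n{context}\n\n"
        f"### Question\n{query}"
    )


docs = [
    "To reset your password, open Settings and choose Security.",
    "Invoices are emailed on the first day of each month.",
    "Password rules: at least 12 characters.",
]
rag_prompt = build_rag_prompt("How do I reset my password?", docs)
print(rag_prompt)
```

Swapping `retrieve` for a call to your actual search system leaves the rest of the flow unchanged, which is the point of the pattern.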

Designing Around an LLM API

To use an LLM effectively, a few principles help:

1. Treat Prompts Like Code

Prompts are logic. Version them, keep examples, and don’t be afraid to A/B test different prompt styles. Small changes (asking for step-by-step reasoning, or adding “respond in JSON”) can noticeably change reliability, so measure their effect instead of guessing.
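One way to treat prompts like code is to keep them in a versioned registry and assign users to variants deterministically. The sketch below is an assumption about how that might look; the `summarize@v1` naming scheme and the helper names are made up for illustration.

```python
import hashlib

# Hypothetical registry: each prompt has an explicit version in its ID.
PROMPTS = {
    "summarize@v1": "Summarize the ticket below.\n\n{ticket}",
    "summarize@v2": (
        "Summarize the ticket below in at most three bullet points. "
        "Think step by step.\n\n{ticket}"
    ),
}


def pick_variant(user_id: str, variants: list[str]) -> str:
    """Assign a user to one variant deterministically, so A/B results are comparable."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def render(prompt_id: str, **fields: str) -> str:
    """Fill a versioned template with request-specific fields."""
    return PROMPTS[prompt_id].format(**fields)


variants = ["summarize@v1", "summarize@v2"]
chosen = pick_variant("user-42", variants)
print(chosen)
print(render(chosen, ticket="Login page shows a blank screen on mobile."))
```

Logging the chosen prompt ID next to each response makes it possible to compare variants later.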

2. Control the Context

Models don’t automatically “see” your data. You must decide:

What to fetch (top search hits, last N messages, user profile)

How much to include (token limits are real)

How to format it (clear section labels, delimiters, etc.)

Good context plumbing is half the battle.
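The three decisions above (what to fetch, how much to include, how to format it) can be sketched as a small context builder. It assumes a crude estimate of about four characters per token rather than a real tokenizer, and the section labels are illustrative.

```python
def estimate_tokens(text: str) -> int:
    """Very rough estimate: about 4 characters per token (an assumption, not a tokenizer)."""
    return max(1, len(text) // 4)


def build_context(sections: list[tuple[str, str]], max_tokens: int) -> str:
    """Add labeled sections in priority order, skipping any that exceed the budget."""
    parts = []
    used = 0
    for label, body in sections:
        block = f"### {label}\n{body}"
        cost = estimate_tokens(block)
        if used + cost > max_tokens:
            continue  # does not fit; a smaller, later section may still fit
        parts.append(block)
        used += cost
    return "\n\n".join(parts)


sections = [
    ("Top search hit", "Password resets live under Settings > Security."),
    ("Recent messages", "User: " + "long message " * 40),
    ("User profile", "Plan: Pro"),
]
context = build_context(sections, max_tokens=30)
print(context)
```

Here the long message history is dropped because it would blow the budget, while the two short sections are kept. In production you would replace `estimate_tokens` with the tokenizer that matches your model.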

3. Validate the Output

Never assume the model returned exactly what you asked for.

Ask for strict formats (JSON, markdown sections).

Parse and validate before trusting.

Add fallbacks: if parsing fails, fall back to a generic answer or a human review path.
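The parse, validate, and fall back steps can be sketched with the standard `json` module. The field names follow the example format mentioned earlier (answer, confidence, suggested_links); the `needs_human_review` flag and the fallback wording are assumptions added for illustration.

```python
import json

# Expected fields and their types, based on the format requested in the prompt.
REQUIRED_FIELDS = {
    "answer": str,
    "confidence": (int, float),
    "suggested_links": list,
}


def fallback() -> dict:
    """Generic answer used when the model's reply cannot be trusted."""
    return {
        "answer": "Sorry, I could not produce a reliable answer.",
        "confidence": 0.0,
        "suggested_links": [],
        "needs_human_review": True,   # hypothetical flag for a review queue
    }


def parse_reply(raw: str) -> dict:
    """Parse the model's text as JSON and check every required field."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return fallback()
    if not isinstance(data, dict):
        return fallback()
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            return fallback()
    data["needs_human_review"] = False
    return data


print(parse_reply('{"answer": "Open Settings.", "confidence": 0.9, "suggested_links": []}'))
print(parse_reply("Sure! Here is the answer you asked for..."))
```

A schema library could replace the hand-written checks; the important part is that nothing downstream sees unvalidated model output.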

4. Mind Cost and Latency

Every call spends tokens and time. Cache where possible, choose smaller models for simple tasks, and design UX that tolerates response times that can run to several seconds (for example, with loading states or background jobs).
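A rough sketch of caching and model routing is shown below. The LLM call is passed in as a plain function so the example runs without any provider; `CachedLLM`, `choose_model`, and the model names `small-model` and `large-model` are placeholders, not real identifiers.

```python
import hashlib
from typing import Callable


class CachedLLM:
    """Wrap an LLM call so identical (model, prompt) pairs are only paid for once."""

    def __init__(self, call_llm: Callable[[str, str], str]):
        self._call_llm = call_llm
        self._cache: dict[str, str] = {}
        self.calls_made = 0   # number of real (uncached) calls

    def complete(self, model: str, prompt: str) -> str:
        key = hashlib.sha256(f"{model}\n{prompt}".encode("utf-8")).hexdigest()
        if key not in self._cache:
            self._cache[key] = self._call_llm(model, prompt)
            self.calls_made += 1
        return self._cache[key]


def choose_model(task: str) -> str:
    """Route simple tasks to a smaller model (names are placeholders)."""
    return "small-model" if task in {"classify", "tag"} else "large-model"


def fake_llm(model: str, prompt: str) -> str:
    """Stand-in for a real API call, so the sketch runs offline."""
    return f"[{model}] reply to: {prompt}"


llm = CachedLLM(fake_llm)
first = llm.complete(choose_model("classify"), "Is this ticket urgent?")
second = llm.complete(choose_model("classify"), "Is this ticket urgent?")
print(first, llm.calls_made)
```

An in-memory dict is only suitable for a single process; a shared cache with expiry would be the natural next step, and caching only makes sense when the same prompt should yield the same answer.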

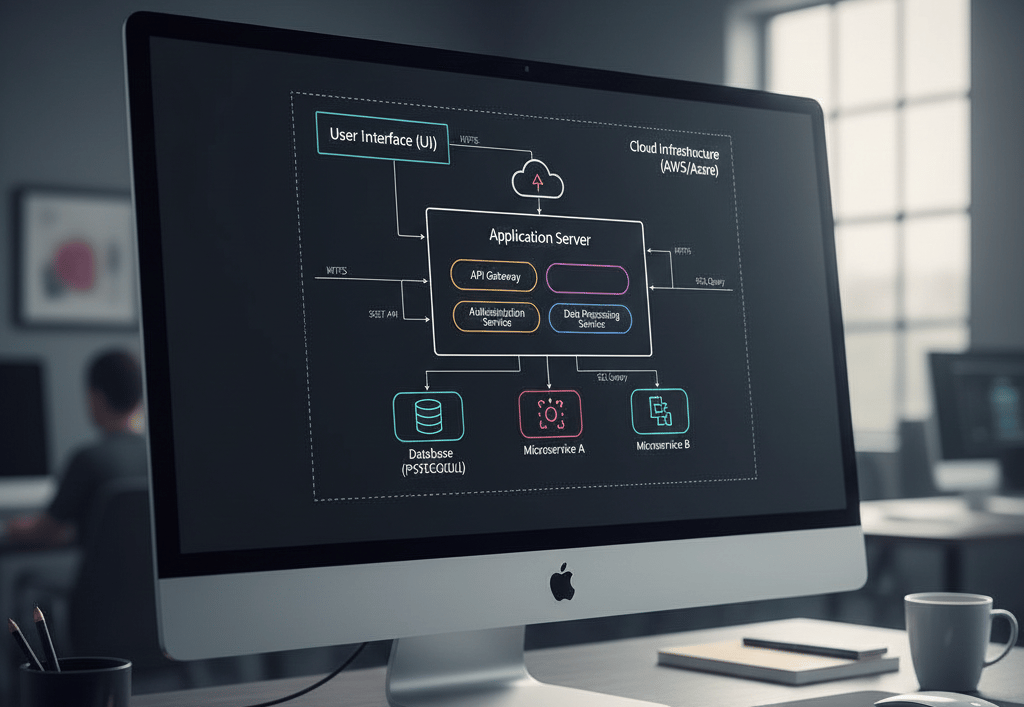

A Simple Starter Architecture

A basic LLM-powered feature often looks like this:

Frontend – chat box or form.

Backend – receives the request, fetches relevant data, builds a prompt, calls the LLM API.

Post-processing – validate, extract structured fields, and return a clean response (or trigger actions).

From there you can layer on: authentication, logging, analytics, prompt versioning, and human-in-the-loop review.
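The backend and post-processing steps can be sketched as one request handler. Data fetching and the LLM call are injected as functions, so the flow is testable without a real provider; `handle_request`, `fake_fetch_docs`, and `fake_llm` are hypothetical names, and the response shape is an assumption.

```python
import json
from typing import Callable


def handle_request(
    question: str,
    fetch_docs: Callable[[str], list[str]],
    call_llm: Callable[[str], str],
) -> dict:
    """Backend: fetch data, build a prompt, call the LLM, then post-process."""
    docs = fetch_docs(question)
    prompt = (
        "Answer the user's question using the documentation below.\n"
        "Return JSON with fields: answer, confidence.\n\n"
        "### Documentation\n" + "\n".join(docs) + "\n\n"
        "### Question\n" + question
    )
    raw = call_llm(prompt)

    # Post-processing: validate before returning anything to the frontend.
    try:
        data = json.loads(raw)
        answer = data["answer"]
        confidence = float(data["confidence"])
    except (json.JSONDecodeError, KeyError, TypeError, ValueError):
        return {"ok": False,
                "answer": "We could not answer that automatically.",
                "confidence": 0.0}
    return {"ok": True, "answer": str(answer), "confidence": confidence}


def fake_fetch_docs(question: str) -> list[str]:
    """Stand-in for a search or database lookup."""
    return ["Password resets are under Settings > Security."]


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM API call."""
    return '{"answer": "Open Settings > Security.", "confidence": 0.8}'


result = handle_request("How do I reset my password?", fake_fetch_docs, fake_llm)
print(result)
```

Because the dependencies are passed in, logging, prompt versioning, or a human review step can be added around `handle_request` without changing its core flow.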

LLM APIs don’t require you to become an ML researcher. They invite you to think of “language understanding and generation” as just another service in your architecture. Once you start treating them like a backend building block, alongside databases, queues, and payments, you unlock a class of features that used to be out of reach for most teams.